📍 Types of NLP

- Major conferences: ACL, EMNLP, NAACL

🔥 Low-level parsing: Tokenization, stemming

🔥 Word and Phrase level

- NER (Named Entity Recognition), POS (Part-of-Speech) tagging, Noun-Phrase chunking, Dependency parsing, Coreference resolution

🔥 Sentence level: Sentiment analysis, Machine translation

🔥 Multi-sentence and Paragraph level: Entailment prediction, Question answering, Dialog systems, Summarization

🔥 Text Mining

- Extracting usable information and insight from text and document data.

- Document clustering

🔥 Information retrieval: closely tied to social science and widely used in recommendation services

🔥 NLP trends

- Representing each word as a vector -> RNN-family models (LSTM, GRU) -> attention modules and the Transformer model -> self-supervised training (BERT, GPT-3, etc.)

- As a result, research is most active at global companies with large amounts of capital and data (Tesla, Google, etc.)

📍 Bag-of-Words

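- Represents a sentence as the sum of the one-hot vectors of the words it contains.

1. Collect every unique word from the example sentences into a vocabulary.

2. Encode each unique word as a one-hot vector.

3. Represent each sentence as the sum of the one-hot vectors of its words.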
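
The three steps above can be sketched in plain Python as follows. The example sentences and the helper names (`build_vocab`, `one_hot`, `bag_of_words`) are assumptions made for illustration, and tokenization is simplified to whitespace splitting.

```python
def build_vocab(sentences):
    """Step 1: give each unique word an index, in order of first appearance."""
    vocab = {}
    for sentence in sentences:
        for word in sentence.split():
            if word not in vocab:
                vocab[word] = len(vocab)
    return vocab


def one_hot(word, vocab):
    """Step 2: encode a word as a vector with a single 1 at its index."""
    vec = [0] * len(vocab)
    vec[vocab[word]] = 1
    return vec


def bag_of_words(sentence, vocab):
    """Step 3: sum the one-hot vectors of every word in the sentence."""
    total = [0] * len(vocab)
    for word in sentence.split():
        for i, value in enumerate(one_hot(word, vocab)):
            total[i] += value
    return total


# Hypothetical example sentences
sentences = [
    "John really really loves this movie",
    "Jane really likes this song",
]
vocab = build_vocab(sentences)
bow = bag_of_words(sentences[0], vocab)
print(vocab)
print(bow)  # "really" appears twice, so its entry is 2
```

Because the vectors are summed, word order is lost and only the counts remain, which is what the name "bag" refers to.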

🔥 Naive bayes classifier

- A classification technique widely used for spam filtering, text classification, sentiment analysis, recommendation systems, and more

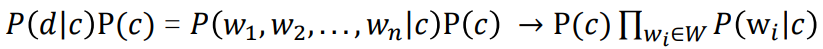

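- Let $d$ be a particular document and let $c$ range over all classes. By Bayes' rule, the most probable class is $c_{MAP} = \arg\max_c P(c|d) = \arg\max_c \frac{P(d|c)P(c)}{P(d)} = \arg\max_c P(d|c)P(c)$, since $P(d)$ is the same for every class.

- In addition, treating $d$ as a sequence of words $w_1, \dots, w_n$ and assuming the words are conditionally independent given the class, $P(d|c)$ can be written as $P(d|c) = P(w_1, \dots, w_n|c) = \prod_{i=1}^{n} P(w_i|c)$.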
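
A minimal sketch of this decision rule, choosing the class that maximizes P(c) times the product of P(w|c) over the words of the document, might look like the following. The training documents are made up for illustration, and two choices here are assumptions not stated in these notes: add-one (Laplace) smoothing so unseen words do not zero out a class, and summing log probabilities instead of multiplying raw ones to avoid underflow.

```python
import math
from collections import Counter, defaultdict


def train_naive_bayes(docs, labels):
    """Estimate log P(c) and log P(w|c) from labeled documents."""
    class_counts = Counter(labels)
    word_counts = defaultdict(Counter)
    vocab = set()
    for doc, label in zip(docs, labels):
        words = doc.split()
        word_counts[label].update(words)
        vocab.update(words)

    log_prior = {c: math.log(n / len(labels)) for c, n in class_counts.items()}
    log_likelihood = {}
    for c in class_counts:
        total = sum(word_counts[c].values())
        # Add-one smoothing (an assumption of this sketch)
        log_likelihood[c] = {
            w: math.log((word_counts[c][w] + 1) / (total + len(vocab)))
            for w in vocab
        }
    return log_prior, log_likelihood, vocab


def predict(doc, log_prior, log_likelihood, vocab):
    """Return argmax_c [log P(c) + sum_i log P(w_i|c)]."""
    scores = {}
    for c in log_prior:
        score = log_prior[c]
        for w in doc.split():
            if w in vocab:  # words never seen in training are skipped
                score += log_likelihood[c][w]
        scores[c] = score
    return max(scores, key=scores.get)


# Hypothetical toy training set
docs = [
    "free prize money now",
    "win free money",
    "meeting schedule tomorrow",
    "project meeting notes",
]
labels = ["spam", "spam", "ham", "ham"]

model = train_naive_bayes(docs, labels)
print(predict("free money", *model))
print(predict("project meeting", *model))
```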

📍 Word Embedding

🔥 Embedding

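- Treating natural language as a sequence whose basic unit of information is the word, embedding is a technique that maps each word to a point in a space of some fixed dimension, that is, to a vector giving that point's coordinates.

- Words with similar meanings are placed close together, and words with dissimilar meanings are placed far apart.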
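
To make "close" and "far" concrete, one common way to compare embedding vectors is cosine similarity. The sketch below uses hand-picked 3-dimensional vectors that are purely hypothetical (real embeddings are learned and have far more dimensions), and cosine similarity is this example's choice of measure rather than something these notes prescribe.

```python
import math


def cosine_similarity(u, v):
    """Cosine of the angle between u and v: 1.0 means same direction."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)


# Hypothetical hand-picked vectors, for illustration only
embeddings = {
    "cat": [0.9, 0.8, 0.1],
    "kitty": [0.85, 0.75, 0.2],
    "hamburger": [0.1, 0.2, 0.9],
}

sim_close = cosine_similarity(embeddings["cat"], embeddings["kitty"])
sim_far = cosine_similarity(embeddings["cat"], embeddings["hamburger"])
print(sim_close, sim_far)  # the first value is the larger one
```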

🔥 Word2Vec

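- Learns word vectors by defining the relationships between words from the given data.

- Can pick out the word whose meaning differs most from the others in a given group of words (word intrusion detection).

- The Word2Vec algorithm splits a given sentence into words and slides a window over them, learning the similarity and relatedness between words that appear together within the window.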
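
The sliding-window step can be sketched as below. This shows only how (center word, context word) training pairs are produced, in the style of the skip-gram variant, which is an assumption since these notes do not say which Word2Vec variant is meant. The function name `skipgram_pairs` and the example sentence are hypothetical, and the neural network that is trained on these pairs is not shown.

```python
def skipgram_pairs(sentence, window_size=1):
    """Slide a window over the sentence and pair each center word
    with every other word inside its window."""
    words = sentence.split()
    pairs = []
    for i, center in enumerate(words):
        start = max(0, i - window_size)
        end = min(len(words), i + window_size + 1)
        for j in range(start, end):
            if j != i:
                pairs.append((center, words[j]))
    return pairs


pairs = skipgram_pairs("I study math", window_size=1)
print(pairs)
# [('I', 'study'), ('study', 'I'), ('study', 'math'), ('math', 'study')]
```

A larger `window_size` pairs each word with more distant neighbors, so the resulting vectors reflect broader context.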

🔥 GloVe(Global Vectors)

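- For each input/output word pair, GloVe counts in advance how many times the two words appear together within one window across the training data ($P_{ij}$) and uses that count during training.

- Generally trains faster than Word2Vec, because repeated pairs are covered by the precomputed counts instead of being processed one occurrence at a time.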
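
The precomputed co-occurrence counts can be sketched as follows. Only the counting step is shown; fitting word vectors to these counts is outside the scope of this example. The function name `cooccurrence_counts`, the example sentences, and the symmetric window are assumptions made for illustration.

```python
from collections import Counter


def cooccurrence_counts(sentences, window_size=1):
    """Count how often each (word_i, word_j) pair appears within one window."""
    counts = Counter()
    for sentence in sentences:
        words = sentence.split()
        for i, center in enumerate(words):
            start = max(0, i - window_size)
            end = min(len(words), i + window_size + 1)
            for j in range(start, end):
                if j != i:
                    counts[(center, words[j])] += 1
    return counts


# Hypothetical example corpus
counts = cooccurrence_counts(["I study math", "I study NLP"], window_size=1)
print(counts[("I", "study")])     # 2: the pair occurs in both sentences
print(counts[("study", "math")])  # 1
```

The pair ("I", "study") is stored once with a count of 2 rather than appearing as two separate training pairs, which is the redundancy the precomputation avoids.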
